Let’s start this intro a little differently – there’s a lot of time and effort that goes into these posts from me, and importantly from the wonderful people I’m chatting with. I want that time and effort to be worthwhile for the person I’m chatting with, so it’s important the blog reaches more people.

With that in mind, before you read, please subscribe.

Now on to the conversation: it’s AI, but not as you know it!

Joe: Hey Alan. Thanks for agreeing to this chat. Can you start off by telling me a little bit about you and the games you’re working on?

Alan: Hi Joe, sure thing! And thank you 🙂

I did some soul-searching during COVID because there was more time to think. I wasn’t enjoying my job because it didn’t involve much creativity and there were too many levels of abstraction between me and the real world. As a kid, I loved playing board games and card games with my grandmothers, and I thought, why not design one? I quit my job, got a part-time job as a chef to pay the bills and, three years later, my first game got picked up by Bordcubator: ‘Posers’, a lightweight party game (A Fake Artist Goes to New York mixed with charades).

I have lots of games on the go. To name a few: Arrrrggghh Mutiny (iterative social deduction with shifting allegiances), Arrrmada (a unique breath-dexterity game), Composure (sharing music tastes and interpreting images)…the list goes on. My current project is War of the Toads, which I intend to self-publish. It’s an 18-card game of duelling samurai toads for 2 players. Doing everything myself (illustration, graphic design, development, etc.) has opened my eyes to how the industry works, and hopefully someone will employ me specifically for those skills!

Joe: That’s a brave step to take – how did you find that transition, and what’s been most challenging along the way?

Alan: It felt like the right choice; staying in a job that wasn’t creative was scarier than charting a new path. I had some money saved up because of COVID, so that helped quite a bit. I’m fairly sure many people in a similar position did something similar.

What’s challenging? Working as a chef means your shifts can fall on the weekend, when friends want to hang out, and the pay isn’t great. But there are benefits: breakfast, lunch and dinner are provided, and I don’t start until 10am, so I don’t have to travel during rush hour. Doing two 12-hour shifts a week means my part-time job is done in two days, which leaves me plenty of time for boardgame design!

Joe: When we take the step into this indie games world, there’s an overwhelming amount to understand and learn. You listed a few elements that you’re developing your skills in: illustration, graphics, development. What do you do to develop your skills in those areas?

Alan: Totally agree. First and foremost, I playtest with others. This is my bread and butter. At this point I’ve probably playtested more of other people’s prototypes than published games, which helps me gauge the gap between published and unpublished games.

At the beginning there were so many things wrong with my games that my first question to playtesters was “what is getting in the way of you understanding my game: the graphics, my explanation, incongruence with the theme, etc.?” People told me, and I fixed those problems bit by bit: new graphic design, an updated theme, player aids or a clearer demo. Now I have a fairly decent understanding of the basics, but I never stop asking those questions.

For development, the thing that helped the most was a shift in mindset. I stopped caring about proving myself and started caring deeply about my audience. Each player’s experience is valid and ultimately I’m creating games for other people. If the experience I had in mind when I designed the game wasn’t consistently matching reality, then it’s up to me to tweak things until it’s right. Feedback is essential for this.

Joe: I sometimes feel that designing a board game is like sculpture; there’s an iterative process taking place that slowly but surely reveals a game.

You mentioned recently in a blog post that you’re using AI to support your game development – can you tell me a little about how you’re using it?

Alan: Sure! Before you get nervous, this isn’t generative, exploitative AI. This is your standard “programme the rules and have bots play the game” approach, like chess bots. No training data is needed but, as you can imagine, it takes a lot of work. It’s sort of like programming a game onto Board Game Arena and gathering tons of data from human playtesters, except the bots play using a form of Monte Carlo Tree Search.

I was approached several years ago by the team at Tabletop R&D, a spinout from Queen Mary University of London, to be a guinea pig for research into whether data gathered from those bots would help boardgame designers on their design and development journey. It turns out they were right, and they made me a co-author on the paper “A case study in AI-assisted board game design” by J Goodman, Diego Perez-Liebana, Simon Lucas & Alan Wallat.

The abstract summarises the work well:

“Our case study supports the view that AI play-testing can complement human testing, but can certainly not replace it. A core issue to be addressed is the extent to which the designer trusts the results of AI play-testing as sufficiently human-like. The majority of design changes are inspired from human play-testing, but AI play-testing helpfully complements these and often gave the designer the confidence to make changes faster where AI and humans ‘agreed’.”

Joe: That’s super interesting. Can you tell us a little bit about the game that was AI playtested? And about Monte Carlo Tree Search?

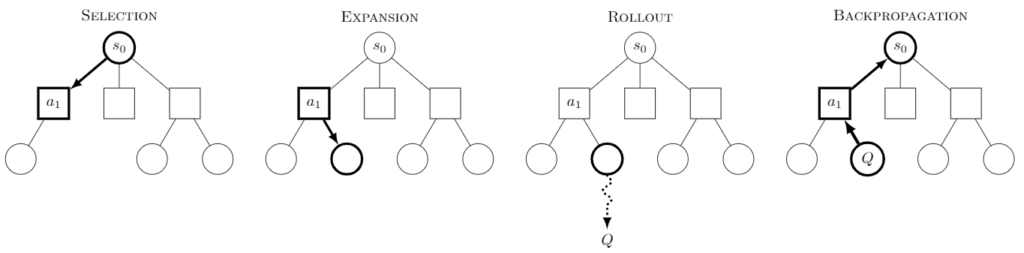

Alan: Game Trees are maps of all possible paths you can take playing a game from beginning to end. The more complex a game, the more branching paths there are.

You might use Tree Search to ‘solve’ a game: what action can I take now that leads me down a branch to victory? If you work backwards from victory to where you are on the tree, you know which decision is best. This sort of Tree Search was the basis of the AI that beat the World Chess Champion in 1997.
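To make the ‘work backwards from victory’ idea concrete, here is a minimal Python sketch on a hypothetical toy game (not one of Alan’s games): players take turns removing 1, 2 or 3 stones from a pile, and whoever takes the last stone wins. This game is small enough to search all the way to the end; chess engines typically can’t, so they stop at a limited depth and score positions with an evaluation function instead.

```python
from functools import lru_cache
from typing import Optional

# Hypothetical toy game: take 1, 2 or 3 stones; whoever takes the last stone wins.
MOVES = (1, 2, 3)


@lru_cache(maxsize=None)
def wins(stones: int) -> bool:
    """True if the player about to move can force a win from this pile."""
    if stones == 0:
        # The previous player took the last stone, so the player to move has lost.
        return False
    # Work backwards: a position is winning if at least one move
    # leaves the opponent in a losing position.
    return any(not wins(stones - m) for m in MOVES if m <= stones)


def best_move(stones: int) -> Optional[int]:
    """Return a move that leads to a forced win, or None if every move loses."""
    for m in MOVES:
        if m <= stones and not wins(stones - m):
            return m
    return None


if __name__ == "__main__":
    for n in range(1, 9):
        print(n, wins(n), best_move(n))
```

Searching the whole tree shows that piles of 4, 8 and 12 stones are lost for the player to move, so the best decision from any other pile is to leave the opponent a multiple of 4.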

Monte Carlo Tree Search plays out some decisions along the path at random and uses the results to focus the search on the parts of the game that look most promising, and so most likely to occur in good play. This means it can search deeper into the future than classic Tree Search, which explores all parts of the Game Tree equally, so it can often play better moves. It also copes better with games that have random elements, such as dice or cards.
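For the curious, here is a minimal sketch of one common form of Monte Carlo Tree Search, UCT (Upper Confidence bounds applied to Trees), on the same hypothetical take-1-to-3-stones game. It is an illustration only, not the TAG framework’s implementation; the names `Node`, `rollout` and `mcts` and the exploration constant `c=1.4` are assumptions made for this example. Each iteration picks a promising path through the tree, adds one new position, finishes the game with random moves, and feeds the result back up the path.

```python
import math
import random

# Hypothetical toy game: take 1, 2 or 3 stones; whoever takes the last stone wins.
MOVES = (1, 2, 3)


def legal_moves(stones):
    return [m for m in MOVES if m <= stones]


class Node:
    def __init__(self, stones, parent=None, move=None):
        self.stones = stones              # stones left in this position
        self.parent = parent
        self.move = move                  # the move that led here
        self.children = {}                # move -> Node
        self.visits = 0
        self.wins = 0.0                   # wins for the player who made `move`
        self.untried = legal_moves(stones)


def uct_child(node, c=1.4):
    """Balance a child's win rate against how rarely it has been explored."""
    return max(
        node.children.values(),
        key=lambda ch: ch.wins / ch.visits
        + c * math.sqrt(math.log(node.visits) / ch.visits),
    )


def rollout(stones, rng):
    """Play random moves to the end; True if the player to move at `stones` wins."""
    turn = 0
    while stones > 0:
        stones -= rng.choice(legal_moves(stones))
        turn ^= 1
    # After the loop, `turn` has flipped past whoever took the last stone.
    return turn == 1


def mcts(stones, iterations, seed=0):
    if stones <= 0:
        raise ValueError("the game is already over")
    rng = random.Random(seed)
    root = Node(stones)
    for _ in range(iterations):
        node = root
        # 1. Selection: walk down fully expanded nodes using UCT.
        while not node.untried and node.children:
            node = uct_child(node)
        # 2. Expansion: add one untried move as a new child.
        if node.untried:
            m = node.untried.pop(rng.randrange(len(node.untried)))
            child = Node(node.stones - m, parent=node, move=m)
            node.children[m] = child
            node = child
        # 3. Simulation: finish the game with random moves.
        to_move_wins = rollout(node.stones, rng)
        # 4. Backpropagation: credit the player who moved into each node.
        while node is not None:
            node.visits += 1
            if not to_move_wins:
                node.wins += 1
            to_move_wins = not to_move_wins
            node = node.parent
    # Play the most-visited move at the root.
    return max(root.children.values(), key=lambda ch: ch.visits).move
```

For example, `mcts(5, 2000)` should settle on taking 1 stone, leaving the opponent a losing pile of 4. Raising `iterations` lets the search look further ahead, at the cost of more computation.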

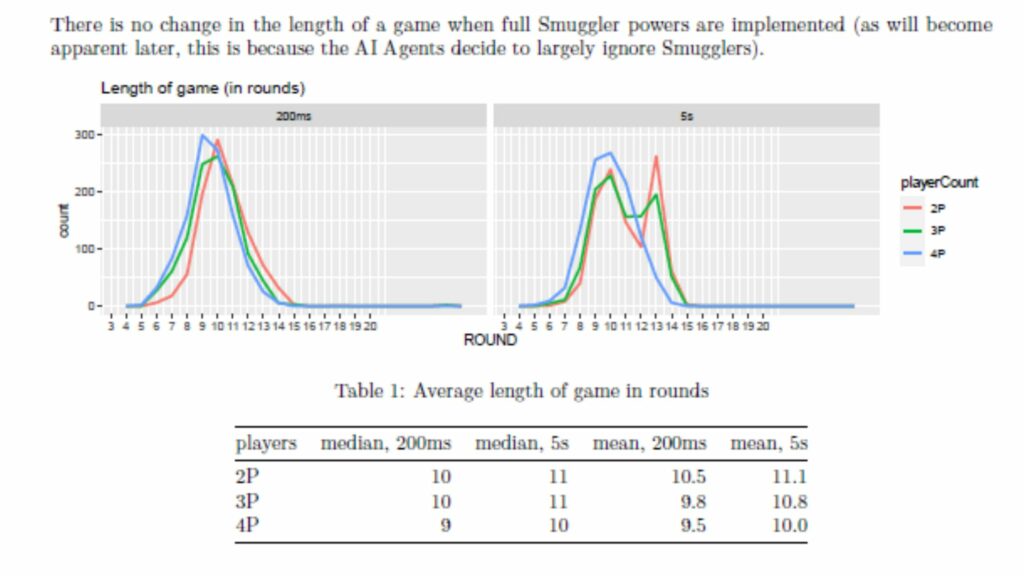

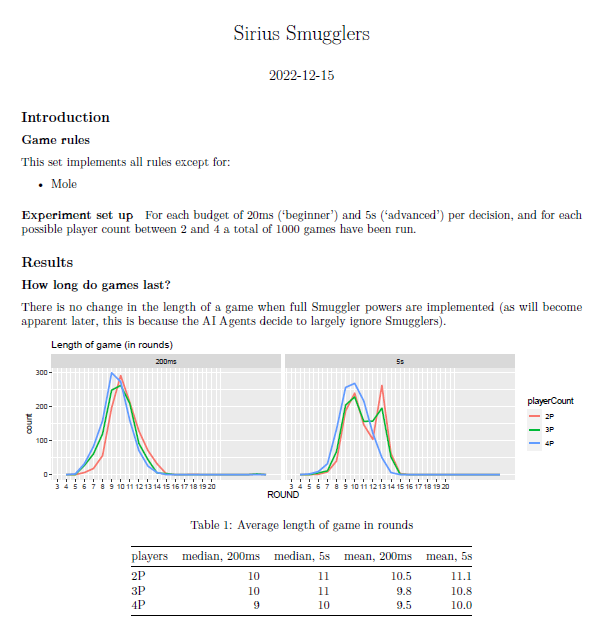

The game that was AI playtested was Sirius Smugglers, a simultaneous space-themed game where players landing on the same planet have to split the resources there. Players with a higher underworld ranking got to perform actions first, e.g. loading cargo, selling cargo, paying and betraying crew. Here’s the case study if you are interested: https://www.tabletopR&D.co.uk/ukge23/sirius

While the game had a fun core loop, it had problems scaling to different player counts. I was also earlier in my design career, and it was an ambitious project. I plan on returning to it once I have finished work on War of the Toads – a tightly designed duelling card game for 2 players using only 18 cards. Incidentally, it is also being tested by Tabletop R&D’s AI and has had some very promising feedback from human playtesters too!

Joe: So once you’ve run your game through the AI, what sort of feedback do you get? How do you know how to integrate the information into your next version?

Alan: A great example of this was the infinite game in Sirius Smugglers. The AI agents refused to sell their Ammonia once they realised they wouldn’t win. The game ends when the Kingpin’s quota is met, so effectively they kept playing to infinity and beyond to avoid losing. Once we increased the amount of Ammonia available, this was no longer a problem.

For War of the Toads, I was worried about luck, and about an imbalance from Blue Hills always defending first. The AIs were given different computational budgets to simulate different skill levels, e.g. 1 millisecond and 2 milliseconds. The AI with more time played better, since it could analyse more paths. This didn’t lead to any changes, but it gave me confidence in the game itself.
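A computational budget can be as simple as a time limit on each decision. This hypothetical sketch uses the toy stone game again, with flat Monte Carlo (a simpler relative of MCTS that just runs random playouts from each legal move) rather than Tabletop R&D’s actual agents. It gives two agents different millisecond budgets and alternates who moves first so that a first-move advantage doesn’t skew the comparison. The function names and defaults are illustrative assumptions, and results vary between runs because they depend on machine speed.

```python
import random
import time

# Hypothetical toy game: take 1, 2 or 3 stones; whoever takes the last stone wins.
MOVES = (1, 2, 3)


def legal_moves(stones):
    return [m for m in MOVES if m <= stones]


def random_playout(stones, rng):
    """Random play to the end; True if the player to move at `stones` wins."""
    turn = 0
    while stones > 0:
        stones -= rng.choice(legal_moves(stones))
        turn ^= 1
    return turn == 1


def flat_mc_move(stones, budget_ms, rng):
    """Run random playouts from every legal move until the time budget runs out."""
    moves = legal_moves(stones)
    wins = {m: 0 for m in moves}
    plays = {m: 0 for m in moves}
    deadline = time.perf_counter() + budget_ms / 1000.0
    while True:
        for m in moves:
            # After our move the opponent is to move; we win if they lose.
            if not random_playout(stones - m, rng):
                wins[m] += 1
            plays[m] += 1
        if time.perf_counter() >= deadline:
            break
    # Pick the move with the best observed win rate.
    return max(moves, key=lambda m: wins[m] / plays[m])


def play_match(budget_a_ms, budget_b_ms, games=100, start_stones=15, seed=0):
    """Agent A vs agent B; who moves first alternates every game."""
    rng = random.Random(seed)
    budgets = {"A": budget_a_ms, "B": budget_b_ms}
    score = {"A": 0, "B": 0}
    for g in range(games):
        order = ("A", "B") if g % 2 == 0 else ("B", "A")
        stones, turn = start_stones, 0
        while True:
            player = order[turn]
            stones -= flat_mc_move(stones, budgets[player], rng)
            if stones == 0:
                score[player] += 1  # this player took the last stone
                break
            turn ^= 1
    return score["A"], score["B"]
```

Calling `play_match(1, 2)` and comparing the two scores gives a rough check of whether extra thinking time translates into more wins, which is the kind of signal that suggests skill matters more than luck.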

The data also showed a slight advantage for the player defending first. So rather than Blue Hills always defending first each round, we changed the rules so that whoever wins Round 1 attacks first in Round 2. This balanced the win rates.

On a separate note, it has also helped with rules writing and graphic design. Only once have human players had a problem resolving the Toad Tactics simultaneously, but an AI needs a defined sequence when this happens. Tabletop R&D suggested placing small graphics on the tactics and adding a note in the rules to eliminate any player confusion.

Ultimately, like good playtesters, Tabletop R&D ask what parameters I’m interested in before testing. It’s really up to the designer to know what they want to get out of it.

Joe: Thanks for the examples. I was worried it was all numbers you got out of the data, but it sounds like there are some really meaningful ways to use it.

Alan: Just out of curiosity, what would you test in your game with the AI if you could?

Joe: For Drop Zone, probably two things. The game has had heavy playtesting at 2 players (it’s easier to find 2 people than 4), so I’d be interested to see how the players’ final scores pan out at higher player counts.

Secondly, I have two win conditions – first to 30 reputation, or first to complete the final mission. These seem pretty balanced, but I feel like AI might be able to point to one or the other being the more favourable path to follow.

Final question, is this something that people can start using to test their games? Or does it require a level of skill, or relationships with AI academics and programmers to do? And… if people are intrigued where should they look next?

Alan: Haha, it is pretty much numbers, but the Tabletop R&D guys interpret the data for me into useful graphs or summaries.

If you can programme yourself, there is the GitHub page for the TAG framework used by Tabletop R&D. I’m not a programmer, so I suspect it requires a lot of skill, but I haven’t tried. The code is publicly available and maintained by the Games AI Research Group at Queen Mary, University of London. Check it out at: https://www.tabletopgames.ai

But I don’t recommend it early in the design process. Boardgame designs change rapidly, and designers are willing to scrap concepts or mechanisms entirely. Spending the time, energy and money to programme a game, only for the designer to scrap part of it, is very costly. So don’t do it at the beginning.

However, in the future I expect it to become very useful for publishers testing games internally before shipping a product, to iron out any unforeseen issues.

Wasn’t that brilliant?! You can find Alan on Instagram if you want to reach out and ask him some questions! The subscribe link is below – drop your email in and get notified of the next post!

Wow! That was really interesting! Many thanks to you both! Another great post Joe 🤩👍